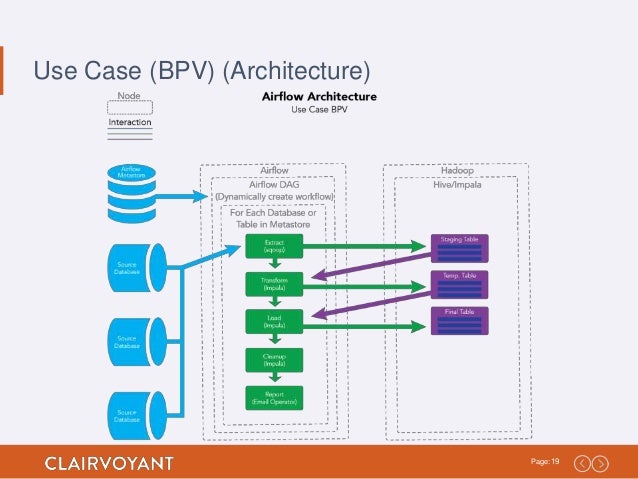

Integrating data scattered across different databases or cloud services is the first step towards getting the data ready to derive business value. Data integration is not a single-step process; it often involves a complex sequence of activities. Designing, implementing, and orchestrating extract, transform, and load (ETL) workflows is a tedious, multi-step part of this work. Many tools and frameworks exist to implement these workflows, with more emerging daily. Some offer overlapping functionality, and it is often difficult to know which one to use when. AWS Glue and Apache Airflow are two such tools: they share some functionality, yet they are designed to solve entirely different problems. This article compares the two and explores why organizations choose one over the other.

## Five Differences Between AWS Glue and Apache Airflow

Despite some overlapping features, AWS Glue and Apache Airflow are very different under the hood, so choosing between them depends a great deal on the specific use case. Before delving deeper, let’s review some key differences between these two tools that illustrate their suitability for different use cases. We will reference the following throughout the article.

| | AWS Glue | Apache Airflow |
|---|---|---|
| Purpose | All-in-one solution for everything related to data integration | Workflow management platform meant for orchestrating data pipelines |
| Deployment | Fully managed service from Amazon; nothing to install | Requires installation on user-managed servers, although managed solutions exist for seamless deployment |
| Execution frameworks | Supports only the Spark framework for implementing transformation tasks | Supports more execution frameworks, since Airflow is a task facilitation framework |
| Monitoring and logging | | Requires separate configuration to support monitoring and logging |

AWS Glue is a fully managed data integration service from Amazon. It helps data engineers discover and extract data from various sources, combine it, transform it, and load it into data warehouses or data lakes. Think of it as an all-in-one ETL or ELT tool.

Airflow provides out-of-the-box operators to interact with popular ETL tools and allows developers to write custom code to trigger any tool that Python can interact with. It’s a great tool for scheduling and orchestrating batch data jobs that run on various technologies into end-to-end data pipelines.

If your ETL jobs do not have complex dependencies and all you need is an end-to-end data transformation and migration solution, consider using AWS Glue. Consider using Apache Airflow if your organization has complex data pipelines with many workflow dependencies.
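
The extract, combine, transform, and load steps that AWS Glue automates can be sketched in plain Python to make the ETL pattern concrete. This is a minimal illustration under stated assumptions, not Glue code. Glue runs such jobs on Spark, whereas this sketch uses only the standard library. The two CSV sources, the `customer_revenue` table, and all function names are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw exports from two separate source systems.
CUSTOMERS_CSV = """customer_id,name,country
1,Ada,DK
2,Linus,FI
"""

ORDERS_CSV = """order_id,customer_id,amount
100,1,25.50
101,1,14.50
102,2,60.00
"""


def extract(csv_text: str) -> list[dict[str, str]]:
    """Extract: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(
    customers: list[dict[str, str]], orders: list[dict[str, str]]
) -> list[tuple[str, str, float]]:
    """Transform: join orders to customers and total the revenue per customer."""
    by_id = {c["customer_id"]: c for c in customers}
    totals: dict[str, float] = {}
    for order in orders:
        cid = order["customer_id"]
        totals[cid] = totals.get(cid, 0.0) + float(order["amount"])
    return [
        (by_id[cid]["name"], by_id[cid]["country"], round(total, 2))
        for cid, total in sorted(totals.items())
    ]


def load(rows: list[tuple[str, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_revenue "
        "(name TEXT, country TEXT, revenue REAL)"
    )
    conn.executemany("INSERT INTO customer_revenue VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn: sqlite3.Connection) -> None:
    """Chain the three steps: extract both sources, transform, then load."""
    customers = extract(CUSTOMERS_CSV)
    orders = extract(ORDERS_CSV)
    load(transform(customers, orders), conn)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
    run_etl(warehouse)
    print(warehouse.execute("SELECT * FROM customer_revenue ORDER BY name").fetchall())
```

Keeping each step in its own function lets it be tested or swapped independently. In a managed service, the two ends would typically be catalog tables or object-storage paths rather than in-memory strings.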

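What an orchestrator such as Airflow contributes for pipelines "with many workflow dependencies" is dependency-aware scheduling: a task runs only after every task it depends on has finished. The sketch below models that idea with Python's standard `graphlib.TopologicalSorter`. It is an assumption-laden illustration, not Airflow's scheduler or API. The `run_pipeline` helper and the task names are hypothetical, and in a real pipeline each task would trigger an external tool (a Spark job, a SQL script, an API call) instead of recording its own name.

```python
from collections.abc import Callable
from graphlib import CycleError, TopologicalSorter


def run_pipeline(
    tasks: dict[str, Callable[[], None]],
    upstream: dict[str, set[str]],
) -> list[str]:
    """Run each task only after all of its upstream tasks have completed.

    `tasks` maps every task name to the callable that performs the work.
    `upstream` maps a task name to the names of the tasks it depends on.
    Returns the task names in the order they ran.
    """
    sorter = TopologicalSorter(upstream)
    for name in tasks:
        sorter.add(name)  # include tasks that declare no dependencies
    sorter.prepare()  # raises CycleError if the dependencies form a cycle
    ran: list[str] = []
    while sorter.is_active():
        # Every task returned here has all of its upstream tasks finished,
        # so a real orchestrator could run this batch in parallel.
        for name in sorted(sorter.get_ready()):
            tasks[name]()
            ran.append(name)
            sorter.done(name)
    return ran


if __name__ == "__main__":
    log: list[str] = []
    names = ["extract_orders", "extract_customers", "join", "load_warehouse"]
    # Hypothetical tasks that only record their own name when run.
    tasks = {n: (lambda n=n: log.append(n)) for n in names}
    upstream = {
        "join": {"extract_orders", "extract_customers"},
        "load_warehouse": {"join"},
    }
    print(run_pipeline(tasks, upstream))

    # A cyclic workflow can never start, so it is rejected before any task runs.
    try:
        run_pipeline(tasks, {"join": {"load_warehouse"}, "load_warehouse": {"join"}})
    except CycleError as err:
        print("cycle detected:", err.args[1])
```

In Airflow itself, the same graph would typically be declared inside a DAG by chaining operators, for example `[extract_orders, extract_customers] >> join`. The scheduler, rather than a loop like this one, then decides when each task runs.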